Hello,

I’ve been trying to capture pixel-perfect video of my vintage DOS PC for archival and instructional purposes. Like most (but not all) monitors, my AV.io HD incorrectly auto-detects the 320x200 video signal as 720x400 scan-doubled text mode. I know this is normal behavior, but it introduces artifacts into the signal because it adds an extra 80 pixels to each line and scales the image to 720 wide.

I feel like at some point I had it working correctly, with the signal coming in at 320x200 (or perhaps being interpreted as 640x400). For now, at least, it always sees it as 720x400.

Is there a way to disable auto-detect of the signal and manually put in the signal parameters?

Hello,

Based on your description of a vintage DOS PC, I assume you’re using VGA as the signal source? The lowest supported capture resolution on the AV.io HD for VGA is 512x512 @ 60 Hz, so unfortunately we can’t say exactly how it will behave when capturing a 320x200 signal; it has not been tested.

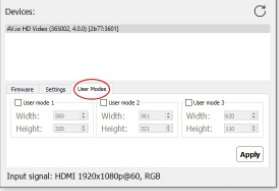

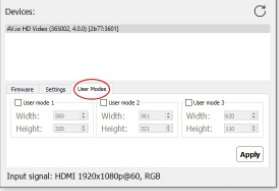

However, you can use the AV.io configuration tool to define a custom user mode. This would not affect the ingested resolution, but it would present that resolution/mode to capture software as an option and hopefully prevent the signal from being scaled to other, less desirable resolutions. If the signal winds up coming in at the wrong aspect ratio, you could always correct it afterwards in video editing/post-production software.

The first step would be to see what resolution the AV.io config tool is actually reporting for the incoming signal. This is the ingested/detected mode and cannot be changed. Based on that, you can try to define some user modes that will hopefully allow you to capture the signal without artifacts. There is a 640x400 mode for VGA on the AV.io HD, so that would probably be the ideal one to aim for, as it shouldn’t change the aspect ratio, but the result will depend on what the AV.io detects for the incoming signal. You will probably need some trial and error with various user modes until you get an acceptable result.

Best of luck!

Afterthought: For some reason I remember seeing the AVio correctly report an incoming 320x200 signal at some point, and then debating whether to adjust the picture to 640x480 (as it would have been displayed on a CRT) or leave it at 640x400. I’m going to re-examine my setup to see if something obvious has changed, or if turning on devices in a specific order makes a difference, etc. But I’m going to leave the info below anyway, as it may still be relevant.
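The 640x400 vs 640x480 question comes down to pixel aspect ratio. Here is a minimal sketch, assuming the original CRT showed 320x200 full-screen at 4:3 (the helper names are hypothetical). A 320x200 frame is stored as 16:10, so each pixel was displayed 1.2x taller than it is wide, and stretching 400 lines to 480 restores the CRT proportions.

```python
from fractions import Fraction

# Assumption: the original CRT displayed every mode on a 4:3 screen.
CRT_ASPECT = Fraction(4, 3)


def pixel_aspect_ratio(width, height, display_aspect=CRT_ASPECT):
    """Width:height of one source pixel when width x height fills the screen."""
    return display_aspect / Fraction(width, height)


def square_pixel_height(width, display_aspect=CRT_ASPECT):
    """Frame height that gives square pixels at `width` for the display aspect."""
    return width / display_aspect


par = pixel_aspect_ratio(320, 200)
print(par)                       # 5/6: each pixel is taller than it is wide
print(1 / par)                   # 6/5: vertical stretch needed for square pixels
print(square_pixel_height(640))  # 480
```

Leaving the capture at 640x400 keeps every source pixel intact, so the 6/5 stretch can be applied later in post if the CRT look is wanted.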

Yes, VGA cable direct to the AVio.

I’ve already been using custom resolutions in an attempt to get a reasonable picture. Oddly, if I set 640x400, it always shows up as 640x386 in my capture software, so I have to use 1280x800. However, the issue is that it’s adding those extra 80 pixels to reach 720 and then squishing the image back down, which creates visual artifacts as if the convergence were off (visible wavy vertical lines every 10 pixels or so).

The timing for 360x200 text mode and CGA 320x200 is almost identical. Most LCDs interpret the signal as text mode and scan-double it to 720x400 so it’s pixel-perfect on an LCD screen (older CRTs, and some LCDs like my original IBM ThinkVision, can differentiate between 360x200 and 320x200).

I was hoping that there was a way to force the input signal to 640x400 so the AVio doesn’t assume it’s scan-doubled text mode at 720x400, thereby eliminating the artifacts introduced by scaling it to 720 and then back down to 640.
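A small model shows why a 640-pixel line captured as 720 produces regularly spaced vertical artifacts. This is a minimal sketch that assumes center-aligned nearest-neighbour sampling; the AV.io’s actual sampling and scaler are not documented in this thread, and the function name is hypothetical. Because 720/640 = 9/8, every 8 source pixels are spread over 9 captured pixels, so one column in each group of 8 gets doubled, and the pattern repeats every 9 captured pixels. An exact 2x scale such as 320 to 640 doubles every pixel uniformly.

```python
from collections import Counter


def samples_per_source_pixel(src_width, dst_width):
    """How many output columns land on each source column under centre-aligned
    nearest-neighbour resampling.

    Simplified model: it assumes the line is sampled at evenly spaced points,
    which is not necessarily how the AV.io works internally.
    """
    hits = Counter()
    for x in range(dst_width):
        # Map the centre of output column x back to a source column, using
        # integer arithmetic so no floating-point rounding creeps in.
        src = ((2 * x + 1) * src_width) // (2 * dst_width)
        hits[src] += 1
    return [hits[i] for i in range(src_width)]


# 640 real pixels sampled as if the line were 720 wide (text-mode assumption).
mismatched = samples_per_source_pixel(640, 720)
print(mismatched[:16])  # [1, 1, 1, 1, 2, 1, 1, 1, 1, 1, 1, 1, 2, 1, 1, 1]
print([i for i, n in enumerate(mismatched) if n == 2][:4])  # [4, 12, 20, 28]

# A clean integer scale, 320 -> 640, treats every pixel the same.
print(set(samples_per_source_pixel(320, 640)))  # {2}
```

Any later 720-to-640 downscale that interpolates will blend those unevenly widened columns, which could plausibly account for the periodic banding; a real scaler may behave differently.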

I don’t know whether this could be addressed in the firmware, by having it look for a 320x200 signal.

In case anyone is wondering, I never figured it out.

If I set a custom User Mode of 320x200 or 640x400, I just get a black screen or a highly distorted picture.

OK, I found a workaround. Or rather, I’m willing to bet this was how I had it before I futzed with it. I enabled the “Preserve Aspect Ratio” option and that seems to do the trick. I don’t know why I didn’t notice that option before.

So now I just set my video capture to the closest resolution, either vertically or horizontally, and it adds black bars. That’s perfectly fine; I can trim that area ezpz in post. It also works best because in DOS it’s constantly changing resolutions depending on whether I’m in the BIOS, the DOS prompt, a 3rd-party DOS shell, and

Only problem is that OBS won’t recognize my custom resolution timings.
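The “Preserve Aspect Ratio” workaround boils down to a fit-inside scale: the picture is scaled by the smaller of the width and height ratios, and the leftover area is filled with black bars. Here is a minimal sketch of that arithmetic. The `letterbox` helper is hypothetical, not part of the AV.io config tool or OBS. It also shows which output size gives an exact integer scale of 640x400.

```python
def letterbox(src_w, src_h, out_w, out_h):
    """Fit a src_w x src_h image inside out_w x out_h without distortion.

    Returns (scaled_w, scaled_h, bar_x, bar_y), where bar_x and bar_y are the
    black-bar widths on each side (left/right and top/bottom).
    """
    # Compare src_w/out_w with src_h/out_h by cross-multiplying (stays exact).
    if src_w * out_h >= src_h * out_w:
        # Width is the limiting side: fill the width, bars top and bottom.
        scaled_w, scaled_h = out_w, round(src_h * out_w / src_w)
    else:
        # Height is the limiting side: fill the height, bars left and right.
        scaled_w, scaled_h = round(src_w * out_h / src_h), out_h
    return scaled_w, scaled_h, (out_w - scaled_w) // 2, (out_h - scaled_h) // 2


print(letterbox(640, 400, 1280, 720))   # (1152, 720, 64, 0): 1.8x, non-integer
print(letterbox(640, 400, 1920, 1080))  # (1728, 1080, 96, 0): 2.7x, non-integer
print(letterbox(640, 400, 1280, 800))   # (1280, 800, 0, 0): exact 2x, no bars
```

The 1280x800 case matches the custom mode mentioned earlier in the thread. Of these three, it is the only one that scales 640x400 by a whole number with no bars to trim.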